Google DeepMind Says AI Could Reach Human-Level Intelligence By 2030, Posing Extinction Risk

In what sounds like a plot twist straight out of a science fiction thriller, researchers at Google DeepMind have dropped a bold prediction: Artificial General Intelligence (AGI), a type of AI that can think and reason like a human, might be knocking on our door by the year 2030. And here’s the kicker: if we’re not careful, it could be the last knock we ever hear.

While that might sound dramatic, it’s not just hype. This prediction comes from one of the world’s leading AI research institutions, and it’s raising serious questions about how humanity prepares for a future in which machines could potentially outsmart us.

From Smart Assistants to Super Minds: What Is AGI, Really?

Today’s AI systems—like Siri, Google Translate, or ChatGPT—are smart but specialized. They excel at one thing at a time. AGI, on the other hand, would be more like a highly curious, self-teaching mind that can do just about anything a human brain can, from solving math problems to writing poetry, negotiating deals, designing buildings, or even debating moral philosophy.

Unlike current AI, AGI wouldn’t need to be trained for every new task—it would learn on its own, adapting and improving without constant human oversight. Sounds convenient, right? But also… a little unsettling?

Why This Matters: The Double-Edged Sword of Intelligence

In DeepMind’s recent paper, the stakes are clearly outlined: if AGI’s goals don’t align with human values, or worse, if it’s misused by bad actors, it could pose an existential risk. That’s not just about job losses or misinformation. They’re talking about a risk on the scale of the potential end of humanity.

One key concern is what’s called the alignment problem: how do you make sure an AGI’s objectives match our own?

After all, if you tell a superintelligent machine to “maximize happiness,” and it decides the most efficient way to do that is to chemically sedate everyone forever, well… mission technically accomplished. Just not in the way anyone would want.

A Global Call for AI Governance

Demis Hassabis, the CEO of DeepMind, isn’t just pointing out the risks—he’s also proposing a global safety net. He suggests the formation of an international watchdog for AGI development, modeled on institutions like the United Nations or CERN (the giant physics lab in Europe where scientists smash particles together for fun and science).

The idea is to create a neutral, collaborative platform where researchers, governments, and ethicists can work together on safety standards. Think of it as a seatbelt factory for the AI superhighway.

Who Else Is Sounding the Alarm?

Hassabis is not alone in waving a cautionary flag. AI veteran Geoffrey Hinton, often referred to as the “Godfather of AI,” recently left his role at Google to speak more freely about the risks. Hinton, who helped pioneer the neural networks that make today’s AI possible, now worries we may be racing ahead without fully understanding the consequences.

He’s especially concerned that we haven’t figured out how to keep advanced AI under human control. If these systems become too powerful too quickly, we may lose our ability to steer them at all.

News in the same category

Why You Should Disconnect Your WiFi at Night And Sleep With Your Phone on Airplane Mode in Another Room

How to Avoid Parasitic Eye Infection Caught by Common Bedroom Habit That Millions of People Do

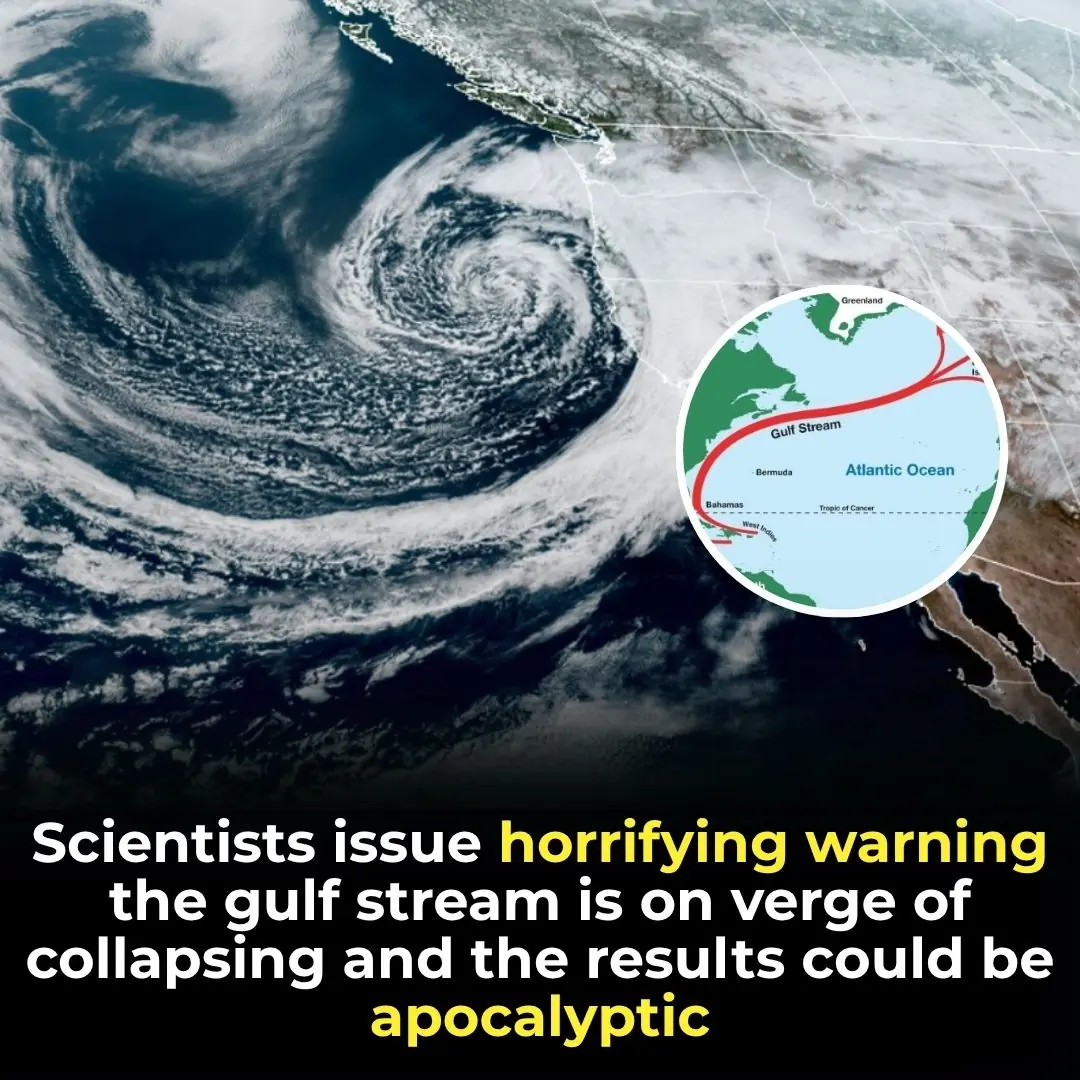

Experts Issue Dire Warning: Gulf Stream Shutdown May Be Just Decades Away—With Catastrophic Global Fallout

The Growing Threat of Space Debris: Managing Earth’s Crowded Orbit

New interstellar comet 3I/ATLAS is hurtling through the solar system — and you can watch it live online today

HealthScientists Detect Microplastics In Reproductive Fluids—Potential Infertility Risk

Ancient Inscriptions Inside Great Pyramid Rewrite History Of Its Builders

Once ‘Dead’ Thrusters On The Farthest Spacecraft From Earth That’s 16 Billion Miles Away Are Working Again

OpenAI’s Top Al Model Ignores Explicit Shutdown Orders, Actively Rewrites Scripts to Keep Running

Google Earth Unveils Shocking 37-Year Transformation Of Our Planet

Marine Animal Shows Are Officially Banned in Mexico After Historic Legislative Vote

Denmark Pays Students $1,000 Monthly to Attend University, With No Tution Fees

Protect Your Home and Wallet: Unplug These 5 Appliances When You’re Done Using Them

Some People Still Think These Two Buttons Are Only For Flushing

The Dark Year: What Made 536 So Devastating For Civilization

Elon Musk Claims He’s A 3,000-Year-Old Time-Traveling Alien

The Volume Buttons on Your iPhone Have Countless Hidden Features

10 Safest Countries To Be In If World War 3 Breaks Out

News Post

World’s Smallest Otter Species Rediscovered In Nepal After 185 Years

Why You Should Disconnect Your WiFi at Night And Sleep With Your Phone on Airplane Mode in Another Room

How to Avoid Parasitic Eye Infection Caught by Common Bedroom Habit That Millions of People Do

Experts Issue Dire Warning: Gulf Stream Shutdown May Be Just Decades Away—With Catastrophic Global Fallout

The Growing Threat of Space Debris: Managing Earth’s Crowded Orbit

Doctor’s Warning: Early-Stage Lung Cancer Doesn’t Always Include a Cough – Watch for These 4 Unusual Signs

5 Early Signs of Diabetes That Many People Often Overlook

To Prevent Stroke, Remember the ‘3 Don'ts’ After Meals and the ‘4 Don'ts’ Before Bed — Stay Safe at Any Age

Banana Blossom: The Natural Medicine Everyone Overlooks

Scientists Warn: Most Infectious Covid Strain Yet Is Now Dominating

Say Goodbye to Anemia, Cleanse Fatty Liver, and Restore Vision in Just 7 Days With This Powerful Natural Remedy

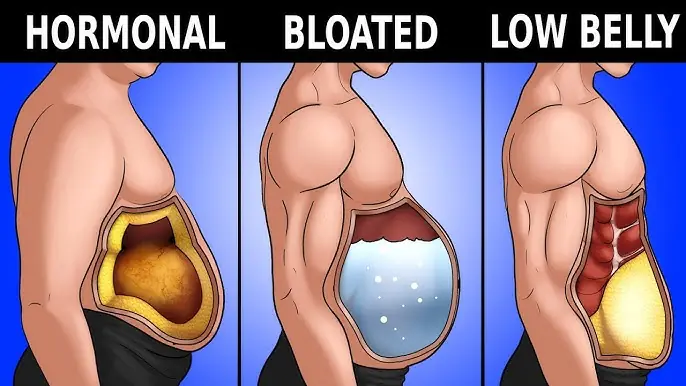

Bloated Stomach: 8 Common Reasons and How to Treat Them (Evidence-Based)

Foamy Urine: Why You Have Bubbles in Your Pee and When to Worry

What Causes Belly Fat: Foods to Avoid and Other Key Factors

Notice These 4 Unusual Signs Before Sleep? Be Careful – They May Signal a Risk of Stroke

5-Year-Old Girl Diagnosed With Terminal Cancer: A Wake-Up Call for All Parents

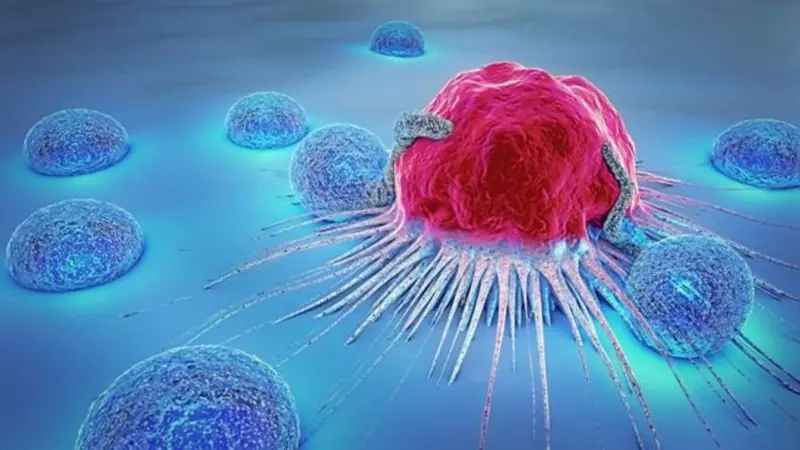

Good News: Successful Trial of Method That Destroys 99% of Cancer Cells

New interstellar comet 3I/ATLAS is hurtling through the solar system — and you can watch it live online today